Sliding Shapes for 3D Object Detection in Depth Images

Abstract

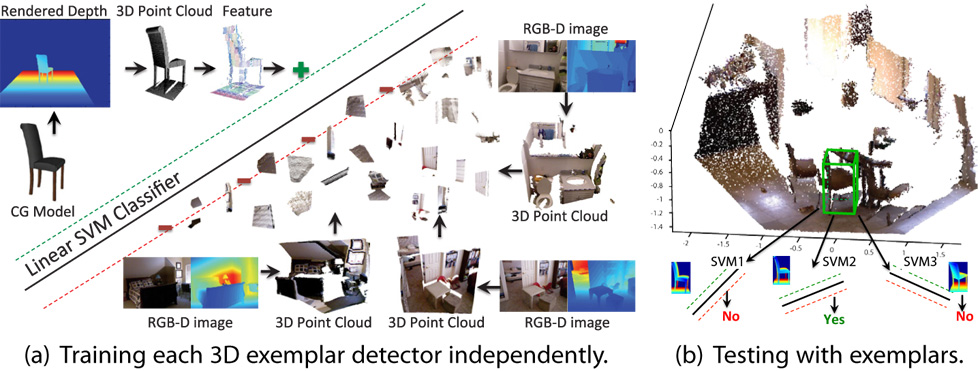

The depth information of RGB-D sensors has greatly simplified some common challenges in computer vision and enabled breakthroughs for several tasks. In this paper, we propose to use depth maps for object detection and design a 3D detector to overcome the major difficulties for recognition, namely the variations of texture, illumination, shape, viewpoint, clutter, occlusion, self-occlusion and sensor noise. We take a collection of 3D CAD models and render each CAD model from hundreds of viewpoints to obtain synthetic depth maps. For each depth rendering, we extract features from the 3D point cloud and train an Exemplar-SVM classifier. During testing and hard-negative mining, we slide a 3D detection window in 3D space. Experimental results show that our 3D detector significantly outperforms the state-of-the-art algorithms for both RGB and RGB-D images, and achieves about a 1.7× improvement in average precision compared to DPM and R-CNN. All source code and data are available online.
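The testing step described in the abstract (sliding a 3D detection window through 3D space and scoring each position with a linear classifier) can be illustrated with a minimal sketch. This is not the released implementation: the binary occupancy feature is a simple stand-in for the paper's point-cloud features, and the names `occupancy_feature`, `slide_window_3d`, `weights`, `bias`, `stride` and `cells_per_axis` are hypothetical, chosen only for this illustration.

```python
import numpy as np

def occupancy_feature(points, origin, window_size, cells_per_axis):
    """Binary occupancy grid of the points inside one window (stand-in feature)."""
    rel = (points - origin) / window_size            # window maps to [0, 1) per axis
    inside = np.all((rel >= 0.0) & (rel < 1.0), axis=1)
    idx = np.floor(rel[inside] * cells_per_axis).astype(int)
    idx = np.minimum(idx, cells_per_axis - 1)        # guard against float round-up
    grid = np.zeros((cells_per_axis,) * 3, dtype=np.float64)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = 1.0
    return grid.ravel()

def slide_window_3d(points, weights, bias, window_size, stride, cells_per_axis=4):
    """Score every window position with a linear model; return the best one."""
    window_size = np.asarray(window_size, dtype=np.float64)
    lo = points.min(axis=0)
    hi = np.maximum(points.max(axis=0) - window_size, lo)
    # Candidate window origins along each axis of the scene's bounding box.
    axes = [np.arange(lo[d], hi[d] + 1e-9, stride) for d in range(3)]
    best_score, best_origin = -np.inf, None
    for x in axes[0]:
        for y in axes[1]:
            for z in axes[2]:
                origin = np.array([x, y, z])
                feat = occupancy_feature(points, origin, window_size, cells_per_axis)
                score = float(weights @ feat + bias)  # linear SVM-style score
                if score > best_score:
                    best_score, best_origin = score, origin
    return best_score, best_origin
```

With one linear model per exemplar, the same loop would be run once per model and the resulting detections merged afterwards; that merging step is omitted here.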

Paper

- S. Song and J. Xiao. Sliding Shapes for 3D Object Detection in Depth Images. Proceedings of the 13th European Conference on Computer Vision (ECCV 2014). Oral Presentation.

Video (Download raw video here)

Talk

Oral presentation at the main conference: Powerpoint slides (95MB) and PDF slides (50MB). Video recording of the talk: http://videolectures.net/eccv2014_song_depth_images/.

Source Code and Data

- slidingShape_release.zip (279 MB): This file contains the source code and an example demo for running it.

- data.zip (16.59 GB): This file contains precomputed features for the RMRC 3D object detection dataset (a subset of the NYUv2 dataset; more information can be found here), and all computer graphics models (.off) used to train the models.

- Pretrained models: Models trained on RMRC and Models trained on SUNRGBD

- 3D detection bounding box results: DetectionMatNYU.zip (15MB) on RMRC; detectionSUNRGBD.zip (15MB) and Orientation (3K) on SUNRGBD.

- slidingshape_feature.zip (12MB): This is standalone code for computing 3D features from a single depth map.
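Features are extracted from the 3D point cloud, so a depth map is typically converted to points first. Below is a minimal sketch of that back-projection, assuming a standard pinhole camera model. It is not taken from the released code: the function name `depth_to_point_cloud`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the convention that zero depth marks a missing measurement are all assumptions made for illustration.

```python
import numpy as np

def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project an (H, W) depth map to an (N, 3) point cloud (pinhole model)."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u: column index, v: row index
    valid = depth > 0                                # assumed: 0 means no measurement
    z = depth[valid]
    x = (u[valid] - cx) * z / fx                     # horizontal offset from the optical axis
    y = (v[valid] - cy) * z / fy                     # vertical offset from the optical axis
    return np.stack([x, y, z], axis=1)
```

The intrinsics depend on the sensor and must be taken from the dataset's calibration; no specific values are assumed here.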

Other Materials

- supp.pdf: Due to the page limit, we moved many technical details to this file. It also contains more results and comparisons.

- resize_slidingshape_poster_eccv.pdf: Poster.